Agent overview

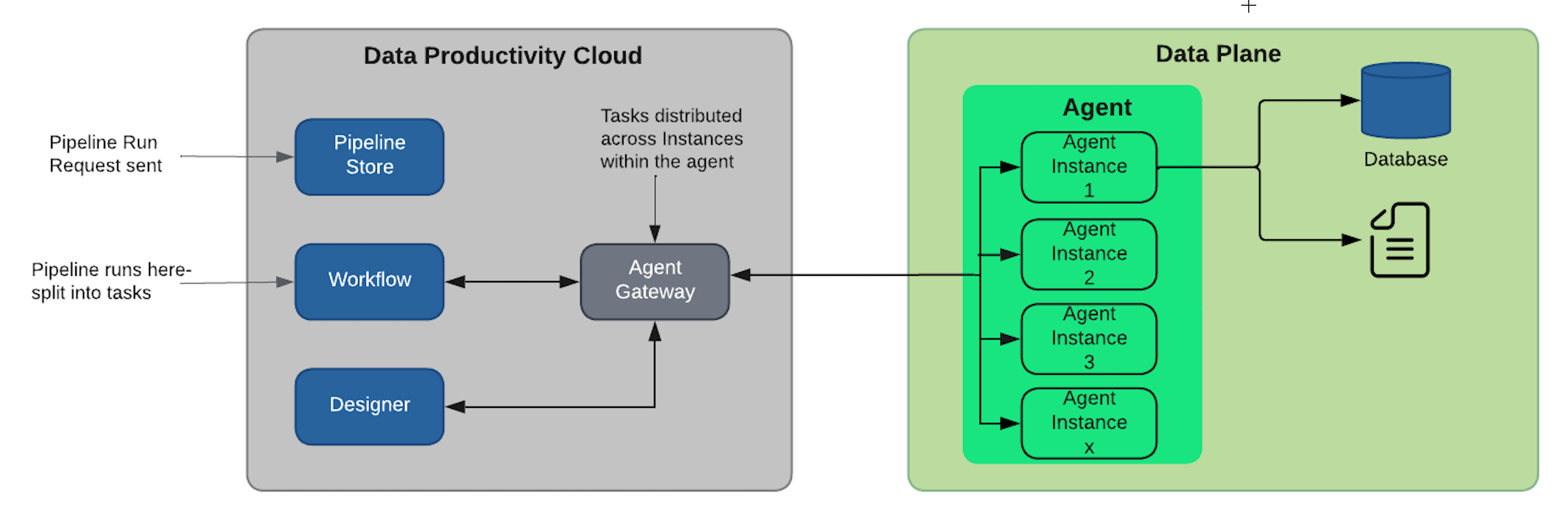

The Matillion agent lets a compatible Matillion ETL job be executed and scheduled through the Matillion Platform while running in the user's own data plane, so the job benefits from the scalability of this new architecture. The agent serves as a bridge between the user's data plane and the centralized platform.

Here is a summary of the agent's key characteristics:

- Execution and scheduling: The agent enables the execution and scheduling of pipelines within the Data Productivity Cloud. It acts as the engine that carries out the tasks defined in the pipelines.

- Data plane integration: The agent runs within the user's data plane and uses the resources of that data plane to execute tasks, taking advantage of the scalability of the new architecture.

- Data sovereignty: The separation of the data plane and control plane ensures that data sovereignty is maintained. Users keep control and ownership of their data, which supports data governance and security requirements.

- Scalability: The agent can scale up by running multiple instances concurrently, allowing for increased workload handling based on the user's requirements. Although the scaling process is currently manual, there are plans to enhance scalability features in the future.

Architecture

The agent is required for Designer to schedule and execute the pipelines you create. Agents can be installed manually on your cloud platform, but a Matillion-hosted agent is also provided when you create your first Designer project, and you can see it on the Manage Agents page in the Hub. Installing your own agent is therefore optional.

What is a data plane?

A data plane is an abstract concept that represents an execution environment. This execution environment, such as AWS Fargate, is used together with the agent, which Matillion provides as a Docker image.

The data plane serves as the infrastructure or environment where the agent instances operate. The agent instances, when deployed within the data plane, collectively form the agent. Each agent instance is responsible for executing individual pipeline tasks.

These pipeline tasks typically involve interactions with various data sources, including a data warehouse. The data plane provides the necessary resources and infrastructure for the agent instances to execute the assigned pipeline tasks and perform data-related operations.
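
To illustrate the idea that several agent instances collectively form one agent, with each instance executing individual pipeline tasks, here is a minimal Python sketch. It is a conceptual model only: threads stand in for agent instances, the names `run_agent` and `instance` are hypothetical, and the shared queue is an assumption made for the example rather than a description of how Matillion distributes work.

```python
import queue
import threading


def run_agent(tasks, instance_count):
    """Run hypothetical agent instances that share one queue of task names.

    Returns a dict mapping each task name to the id of the instance that ran it.
    """
    pending = queue.Queue()
    for task in tasks:
        pending.put(task)

    completed = {}
    lock = threading.Lock()

    def instance(instance_id):
        # Each instance keeps taking tasks until the queue is empty.
        while True:
            try:
                task = pending.get_nowait()
            except queue.Empty:
                return
            # "Executing" a task is only recorded here; nothing real runs.
            with lock:
                completed[task] = instance_id

    threads = [
        threading.Thread(target=instance, args=(i,))
        for i in range(instance_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return completed


if __name__ == "__main__":
    print(run_agent(["extract", "transform", "load"], instance_count=2))
```

Which instance picks up which task depends on thread timing, so the mapping can differ between runs. Every task is executed exactly once either way.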

What is the agent?

The agent is a software component responsible for executing the pipeline tasks within the specified environment. It serves as the execution engine for the pipelines and interacts with the agent gateway to receive the pipeline tasks that need to be executed. The agent communicates with the agent gateway using an egress-only method, meaning it can send requests and receive responses but cannot accept incoming connections.

The pipeline tasks that are sent to the agent originate from the Software-as-a-Service (SaaS) platform. These tasks define the specific operations and transformations to be performed on the data within the pipeline.

What is the agent gateway?

The agent gateway is the communication interface between the agents and the Software-as-a-Service (SaaS) platform. It acts as the bridge between the agents and the central platform.

Agents use the agent gateway to communicate with the SaaS platform in a request-response manner. They regularly poll the agent gateway to check for new work tasks or instructions that need to be executed. By polling, agents proactively enquire whether there are any pending tasks for them to handle.

Once the agents receive the pipeline tasks from the SaaS platform via the agent gateway, they proceed to execute these tasks within their respective data planes. After completing the tasks, the agents send back responses or results to the agent gateway, providing updates on the status of the executed work tasks.
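
The poll, execute, and report cycle described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the agent's actual implementation: `FakeGateway`, `poll`, `report`, `run_agent_polls`, and the `SUCCESS`/`FAILED` status strings are all hypothetical, and an in-memory object stands in for the real agent gateway so the example runs without a network. The point it shows is that the agent always initiates the exchange, which is what makes the communication egress-only.

```python
from collections import deque


class FakeGateway:
    """In-memory stand-in for the agent gateway (hypothetical interface)."""

    def __init__(self, tasks):
        self._pending = deque(tasks)
        self.statuses = []

    def poll(self):
        """Return the next pending task, or None when there is no work."""
        return self._pending.popleft() if self._pending else None

    def report(self, task_id, status):
        """Record a status update sent back by the agent."""
        self.statuses.append((task_id, status))


def run_agent_polls(gateway, execute, max_polls):
    """Poll the gateway up to max_polls times, executing any task received."""
    for _ in range(max_polls):
        task = gateway.poll()  # outbound request made by the agent
        if task is None:
            # No work available; an actual agent would presumably wait
            # before polling again.
            continue
        try:
            execute(task)
            gateway.report(task["id"], "SUCCESS")
        except Exception:
            gateway.report(task["id"], "FAILED")


if __name__ == "__main__":
    gateway = FakeGateway([{"id": "task-1"}, {"id": "task-2"}])
    run_agent_polls(gateway, execute=lambda task: None, max_polls=3)
    print(gateway.statuses)
```

The gateway object never calls into the agent in this sketch. It only answers requests, which mirrors the request-response pattern in which the agent accepts no incoming connections.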

What is the agent manager?

The agent manager is an application or tool that facilitates the creation, configuration, and management of agents within the Data Productivity Cloud ecosystem. It is typically accessed through the Hub, which serves as a centralized platform for managing various aspects of the data pipelines.

With the agent manager, users can create and define configurations for installing and deploying agents. This includes specifying settings such as the agent image, environment variables, resource allocation, and any other required parameters. The agent manager provides a user-friendly interface or set of commands to simplify the process of configuring and setting up agents.
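
As a rough picture of the kind of settings involved, the sketch below models an agent configuration as a small Python data class with a basic validation step. The class name `AgentConfig`, its field names, and the example values are assumptions made for illustration; they do not reflect the actual fields or format used by the agent manager.

```python
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    """Hypothetical agent configuration; field names are illustrative only."""

    image: str                 # container image reference for the agent
    cpu_units: int             # resource allocation: CPU
    memory_mib: int            # resource allocation: memory
    environment: dict = field(default_factory=dict)  # environment variables

    def validate(self):
        """Return a list of human-readable problems (empty if none found)."""
        problems = []
        if not self.image:
            problems.append("image must not be empty")
        if self.cpu_units <= 0:
            problems.append("cpu_units must be positive")
        if self.memory_mib <= 0:
            problems.append("memory_mib must be positive")
        return problems


if __name__ == "__main__":
    config = AgentConfig(
        image="registry.example.invalid/agent:latest",  # placeholder value
        cpu_units=1024,
        memory_mib=4096,
        environment={"EXAMPLE_SETTING": "value"},  # placeholder variable
    )
    print(config.validate())
```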

Additionally, the agent manager may offer functionality for managing agents in compatible environments such as Amazon ECS on AWS Fargate. This can include automatic updates, where the agent manager updates agents to newer versions for you. Agents deployed in compatible environments then stay up to date with the latest features, bug fixes, and security patches.

Considerations

The following considerations cover recommendations and limitations for using the Data Productivity Cloud and agents:

- Creation of an agent and necessary resources in AWS is required to run pipelines using the Data Productivity Cloud.

- External storage, such as AWS Secrets Manager, is recommended for storing credentials.

- Recreating OAuth entries, such as for Salesforce Query, may be necessary.

- Running production workloads and workloads with sensitive data should be avoided during the private preview period of Unlimited Scale.
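
For the recommendation above about keeping credentials in external storage such as AWS Secrets Manager, a minimal Python sketch of reading a secret could look like this. It uses the `get_secret_value` call from the `boto3` library and assumes the secret was stored as a JSON string. The helper name `load_credentials` and the secret name in the usage example are hypothetical, and this shows one general way to read a secret, not how the agent itself retrieves credentials.

```python
import json


def load_credentials(secret_id, client=None):
    """Fetch a secret from AWS Secrets Manager and parse it as JSON.

    `client` may be any object with a compatible get_secret_value method,
    which makes the function easy to exercise without AWS access.
    """
    if client is None:
        import boto3  # third-party AWS SDK, imported only when needed

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    # Assumption: the secret holds a JSON document in SecretString.
    return json.loads(response["SecretString"])


if __name__ == "__main__":
    # Hypothetical secret name; requires AWS credentials and permissions.
    credentials = load_credentials("example/warehouse-credentials")
    print(sorted(credentials))
```

Passing the client as a parameter keeps the credentials out of the code and lets the lookup be checked locally with a stub before pointing it at a real account.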

Help us improve

The agent is still in development. Please get in touch with any problems you encounter while using this product.