Connecting to external services securely

Overview

Matillion ETL uses query components or "connectors" to access external services—including Google, Salesforce, Dynamics 365 and many more. This document will explain how Matillion ensures the data from these services is accessed, staged and used securely.

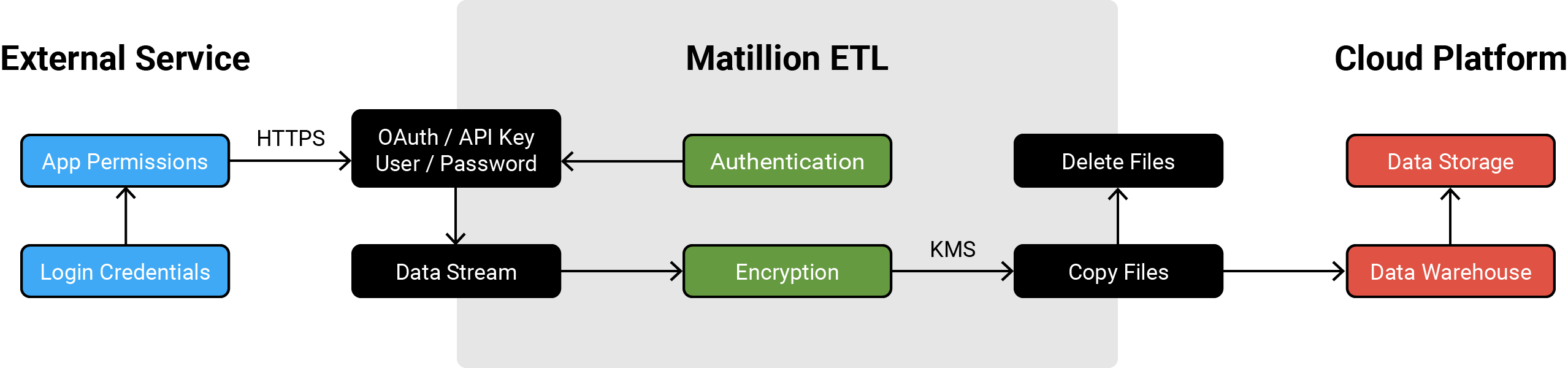

Example of a Secure Connection to External Services on AWS

Accessing External Services

Matillion Connectors

Matillion ETL has a wide range of "out of the box" connectors linked to external services, with more being added periodically.

When accessing one of these external services via a Matillion ETL connector, the connector will first need to be authenticated by the service using a username and password, OAuth, API key or a combination of these. Admin or developer level permissions will usually be required to grant Matillion ETL access to any data associated with the service account.

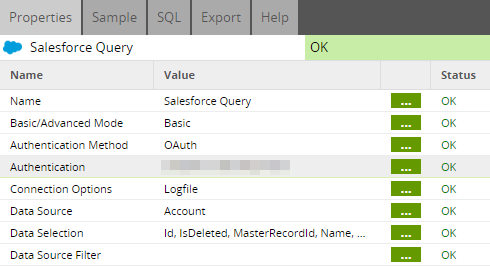

Once configured, these authentication details can be stored in the connector via the Properties panel (where details are immediately obfuscated), or in the Matillion Password Manager (where details can be encoded or encrypted; please refer to Manage Passwords for more details).

Please Note

- By default, Matillion ETL connectors will only request the minimum level of access required to function properly. Any further access or permissions must be configured through the external service.

- To learn how to authenticate a connector, please refer to Manage OAuth and / or the document associated with the relevant connector.

Connector Properties Panel

Transferring and Encrypting Data

Matillion ETL connectors use either Matillion's own framework or JDBC (Java Database Connectivity) drivers to fetch the data from an external service. These two connector types work as follows:

- Connectors using JDBC drivers: model the data into a tabular format, then write it to a file, formatted as CSV for Redshift, Snowflake and Synapse, or as delimited JSON for BigQuery

- Connectors using Matillion's framework: send an HTTPS request to the service, then stage the data in the relevant cloud data warehouse
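The two file formats named for JDBC-based connectors can be illustrated with a minimal Python sketch. This is not Matillion's implementation: the function names `rows_to_csv` and `rows_to_delimited_json` and the sample rows are hypothetical, and "delimited JSON" is assumed here to mean one JSON object per line.

```python
import csv
import io
import json


def rows_to_csv(columns, rows):
    """Serialise tabular rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)
    writer.writerows(rows)
    return buffer.getvalue()


def rows_to_delimited_json(columns, rows):
    """Serialise tabular rows as newline-delimited JSON (assumed layout)."""
    lines = [json.dumps(dict(zip(columns, row))) for row in rows]
    return "\n".join(lines) + "\n"


# Hypothetical sample data standing in for rows fetched from a service.
columns = ["id", "name"]
rows = [(1, "Acme"), (2, "Globex, Inc.")]

csv_text = rows_to_csv(columns, rows)
json_text = rows_to_delimited_json(columns, rows)
```

Note that the CSV writer quotes the second name because it contains a comma, whereas the JSON form needs no such escaping.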

Once the data has been fetched from the service, it is temporarily stored or "staged" in the relevant cloud data warehouse's data storage—S3 for AWS, GCS for GCP and Blob Storage for Azure. Please refer to Environments for more details on setting up a connection to a cloud data warehouse.

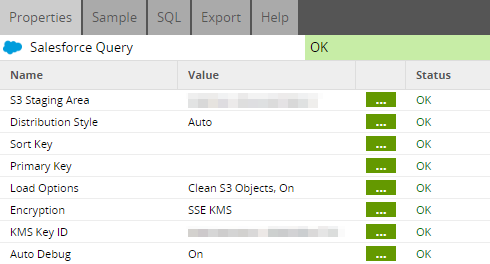

AWS also allows the staged data to be encrypted inside the S3 bucket. This can be set up via the Encryption input in the connector's Properties panel. Options include no encryption, encryption with a key stored on KMS, or encryption with a key stored on an S3 bucket.
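For readers who want to see what these three options look like at the S3 API level, the sketch below maps each choice to the server-side encryption arguments accepted by boto3's `put_object`. It is an illustration only, not how Matillion ETL applies the setting: the mode names and the `encryption_args` helper are hypothetical, and the "key stored on an S3 bucket" option is assumed to correspond to S3-managed keys (SSE-S3).

```python
def encryption_args(mode, kms_key_id=None):
    """Map a hypothetical encryption choice to S3 put_object keyword arguments."""
    if mode == "none":
        # No server-side encryption requested explicitly.
        return {}
    if mode == "sse-s3":
        # Assumption: S3-managed keys, requested with the AES256 setting.
        return {"ServerSideEncryption": "AES256"}
    if mode == "sse-kms":
        if not kms_key_id:
            raise ValueError("sse-kms requires a KMS key id")
        return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_id}
    raise ValueError(f"unknown encryption mode: {mode}")


# Example use with boto3 (requires AWS credentials; names are placeholders):
#   s3 = boto3.client("s3")
#   s3.put_object(Bucket="my-staging-bucket", Key="stage/file.csv",
#                 Body=data, **encryption_args("sse-kms", "my-kms-key-id"))
```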

Lastly, a copy command is issued to the data warehouse and (depending on the connector's configuration) the staged files may then be deleted from data storage.
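The stage, copy and clean sequence can be sketched end to end with local stand-ins. In this toy example a temporary file plays the role of cloud storage and an in-memory SQLite table plays the role of the data warehouse; the function name `stage_copy_clean`, the `clean_staged_files` flag and the table layout are all hypothetical and do not reflect Matillion ETL's internals.

```python
import csv
import os
import sqlite3
import tempfile


def stage_copy_clean(rows, clean_staged_files=True):
    """Stage rows in a file, load them into a table, then optionally delete the file."""
    # 1. Stage: write the fetched rows to a temporary CSV file.
    fd, staged_path = tempfile.mkstemp(suffix=".csv")
    with os.fdopen(fd, "w", newline="") as handle:
        csv.writer(handle).writerows(rows)

    # 2. Copy: load the staged file into the target table
    #    (SQLite stands in for the warehouse's copy command).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE target (id INTEGER, name TEXT)")
    with open(staged_path, newline="") as handle:
        conn.executemany("INSERT INTO target VALUES (?, ?)", csv.reader(handle))
    conn.commit()

    # 3. Clean: remove the staged file if the caller asked for it.
    if clean_staged_files:
        os.remove(staged_path)

    return conn, staged_path
```

Keeping the clean step optional mirrors the text above: whether staged files survive the load depends on configuration.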

Please Note

- No sensitive data or passwords are ever copied to a cloud data warehouse or data storage.

- All staged files may also be removed or "cleaned" from data storage after being copied into Matillion ETL. This can be done via the Load Options input in the connector's Properties panel.

- JDBC drivers are used to copy data to Redshift, Snowflake or Synapse, while BigQuery's Java library is used to copy data to BigQuery.

Staging, Cleaning, Encrypting and Logging

Logging Activity

Connectors using Matillion's framework will automatically push activity to the Server Log, which can be downloaded via Admin → Download Server Log. No sensitive data is logged: only the request made and the status code returned are recorded, with passwords removed.
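As an illustration of logging a request and its status code without exposing secrets, the following sketch redacts credentials from a URL before building a log line. It is not Matillion's logging code: the helper names, the `SENSITIVE_KEYS` list and the log line layout are assumptions made for the example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of query parameters treated as sensitive.
SENSITIVE_KEYS = {"password", "api_key", "access_token"}


def redact_url(url):
    """Return the URL with sensitive query values and any user:password@ prefix removed."""
    parts = urlsplit(url)
    query = [
        (key, "REDACTED" if key.lower() in SENSITIVE_KEYS else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    # Rebuild the host without embedded credentials.
    host = parts.hostname or ""
    if parts.port:
        host = f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, host, parts.path, urlencode(query), parts.fragment))


def log_line(method, url, status_code):
    """Format a log entry containing only the request and its status code."""
    return f"{method} {redact_url(url)} -> {status_code}"


line = log_line(
    "GET",
    "https://user:secret@api.example.com/v1/items?page=2&api_key=abc123",
    200,
)
```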

Connectors using JDBC drivers do not automatically log activity to Matillion ETL, so logging must be configured. This can be done by selecting On for the Auto Debug input, which reveals the Debug Level input, set to 3 by default. Please refer to the document associated with the relevant connector for an explanation of what each level logs.

Please Note

- After setting Auto Debug to "On", activity will be logged in the Tasks panel.

- Alternatively, activity may also be saved to an external file. The location of this file can be specified via the Connection Options input in the connector's Properties panel, using the "logfile" parameter.